Extracting structured information from documents with LLMs

The code for this blog can be found in this repository.

The progress around LLMs has been amazing, and I'm always thrilled to see releases that make it easier for developers to work with these powerful tools. At the beginning of this year, I worked on a project where I needed to extract structured information from a bunch of documents (think PDFs, Word documents, etc.) using ChatGPT. What I found was that using ChatGPT this way required some creative problem-solving and scripting.

The structure problem

As we will see in this blog, LLMs are great at converting unstructured documents into structured pieces of information, but (until recently!) it was quite difficult to ensure they adhere to the structure you provide them with. Let's illustrate the problem with a small example.

We could throw an invoice document into ChatGPT and ask it to return the following JSON object.

    {
      "company": "John Smith",
      "email": "john@example.com",
      "invoiceId": "INVO-005"
    }

However, ChatGPT would sometimes return broken or malformed JSON, causing our downstream system to fail. My team spent countless hours developing a retry mechanism that validated the output against a specific JSON schema - if it didn't match, we'd ask ChatGPT to correct its response. While this approach worked well for our needs, we knew we weren't alone in facing these issues.
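To make the idea concrete, here is a minimal sketch of such a retry loop. It is not the code my team ran: the `ask_model` callable is a hypothetical stand-in for whatever function sends a prompt to the model and returns its raw text, and the validation is a hand-rolled check of the three fields from the example above rather than a full JSON schema validator.

```python
import json
from typing import Callable, Optional, Tuple

# Expected fields and their types, taken from the example object above.
EXPECTED_FIELDS = {"company": str, "email": str, "invoiceId": str}


def validate(raw: str) -> Tuple[Optional[dict], Optional[str]]:
    """Return (data, None) if raw is acceptable, otherwise (None, error message)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"Invalid JSON: {exc}"
    if not isinstance(data, dict):
        return None, "Expected a JSON object at the top level."
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in data:
            return None, f"Missing field: {field}"
        if not isinstance(data[field], expected_type):
            return None, f"Field {field} must be of type {expected_type.__name__}"
    return data, None


def extract_with_retries(
    ask_model: Callable[[str], str],  # hypothetical: sends a prompt, returns raw text
    prompt: str,
    max_attempts: int = 3,
) -> dict:
    """Ask the model, validate the answer, and feed errors back until it passes."""
    current_prompt = prompt
    for _ in range(max_attempts):
        raw = ask_model(current_prompt)
        data, error = validate(raw)
        if data is not None:
            return data
        # Tell the model what was wrong and ask for a corrected answer.
        current_prompt = (
            f"{prompt}\n\nYour previous answer was:\n{raw}\n\n"
            f"It was rejected because: {error}\n"
            "Return only the corrected JSON object."
        )
    raise ValueError(f"No valid response after {max_attempts} attempts")
```

Every failed attempt costs another round trip and more tokens, which is part of why this kind of plumbing gets expensive to maintain.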

In the meantime, a plethora of libraries appeared, each attempting to address the problem. Maintaining all of this is a massive engineering effort that OpenAI whisked away by releasing structured outputs. With this feature, developers can now pass a JSON schema and get back a response that conforms to it - it's a major time-saver for those working with ChatGPT!

Structured outputs are also available via Azure OpenAI service.
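For the invoice object from earlier, passing a JSON schema could look roughly like the sketch below. The schema itself is my own illustration built from the three example fields. The outer dictionary follows the `response_format` shape that the OpenAI chat completions API accepts for structured outputs as far as I know it, so check it against the current API reference before relying on it.

```python
import json

# JSON schema for the example invoice object. Strict mode expects every
# property to be listed as required and additional properties to be disallowed.
invoice_schema = {
    "type": "object",
    "properties": {
        "company": {"type": "string"},
        "email": {"type": "string"},
        "invoiceId": {"type": "string"},
    },
    "required": ["company", "email", "invoiceId"],
    "additionalProperties": False,
}

# Value for the `response_format` argument of a chat completions request.
# The name "invoice" is an arbitrary label chosen for this example.
response_format = {
    "type": "json_schema",
    "json_schema": {"name": "invoice", "strict": True, "schema": invoice_schema},
}

print(json.dumps(response_format, indent=2))
```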

Structured outputs

Here is our task for today - I've extracted a few of my invoices from Foodpanda, a popular food delivery app. I've been thinking about analysing my delivery habits, so I'll ask ChatGPT to extract parts of these documents into JSON which then can be used for further processing.

In our simplified document processing pipeline, we extract the text of each invoice into one big string. Behind the scenes, the few-shot prompting technique, along with an invoice example in the prompt, steers the model toward the correct output.

This line is where the structured output magic happens. The provided Pydantic schema forces ChatGPT to return a response in the exact format we specified. To keep our prompt in sync with the schema, an example invoice is inserted into the prompt. When we add a new field to the schema or delete an existing one, Python will warn us that the example within the prompt needs to be updated as well.

As a nice side effect, our downstream code also knows what kind of object it is working with, thanks to the Python type hints.
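The sketch below shows one way the pieces described above can fit together; it is not a copy of the code in the repository. The `Invoice` fields, the prompt wording, and the model name are assumptions made for illustration, and it assumes Pydantic v2 and a recent OpenAI Python SDK that exposes `client.beta.chat.completions.parse`. Because the example invoice is itself an instance of the schema, a changed schema makes the example fail with a validation error until it is updated.

```python
from pydantic import BaseModel, ConfigDict


class Invoice(BaseModel):
    # Reject unknown fields so a stale example fails loudly.
    model_config = ConfigDict(extra="forbid")

    # Hypothetical fields, mirroring the small JSON example from earlier.
    invoice_id: str
    company: str
    email: str


# The few-shot example is an instance of the schema. If a field is added to or
# removed from Invoice, this constructor raises a ValidationError on import.
EXAMPLE_INVOICE = Invoice(
    invoice_id="INVO-005", company="John Smith", email="john@example.com"
)


def build_messages(invoice_text: str) -> list:
    """Build the chat messages, embedding the example invoice in the prompt."""
    system = (
        "Extract the invoice details from the text the user provides.\n"
        f"Example output:\n{EXAMPLE_INVOICE.model_dump_json(indent=2)}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": invoice_text},
    ]


def extract_invoice(invoice_text: str) -> Invoice:
    # Imported lazily so the schema can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    completion = client.beta.chat.completions.parse(
        model="gpt-4o-2024-08-06",  # assumed model name; use one that supports structured outputs
        messages=build_messages(invoice_text),
        response_format=Invoice,  # the Pydantic schema the response must follow
    )
    invoice = completion.choices[0].message.parsed
    if invoice is None:  # for example, when the model refuses to answer
        raise ValueError("The model did not return a parsed invoice")
    return invoice
```

Since `extract_invoice` returns an `Invoice`, editors and type checkers can tell the calling code which attributes exist.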

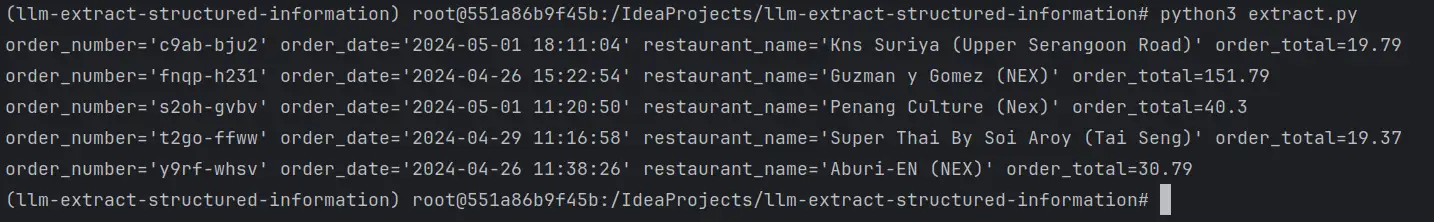

Run python3 extract.py and voila!

Production-ready structured outputs

Our toy project worked marvelously with one big prompt. At my current workplace, Freeday, we process tens of thousands of documents of varying sizes, so one big prompt would not scale well. When it comes to building robust production systems, here are some points to take into consideration:

- The system currently takes in the whole invoice, including a lot of unnecessary information. Identifying which pages are relevant, removing the clutter, and similar steps can be baked into the document processing pipeline. These steps vary widely depending on your documents, but investing effort here lowers cost, because the prompt contains fewer tokens, and improves latency, because the LLM responds faster with fewer tokens to process.

- Smaller prompts suit an LLM better because it has less context to work through. When extracting 10-20 fields, a bigger prompt is harder for the model to cope with and it might omit some fields. Breaking the work into smaller, discrete tasks, such as one prompt that extracts only the invoice number and date and another that extracts only the invoice items, will improve accuracy. Combining this technique with a document pipeline that supplies only the relevant parts of each document can go a long way.
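The two points above can be sketched together as a tiny pipeline. Everything in it is hypothetical: the keyword list, the task split, and the `run_task` callable, which stands in for a function that sends one prompt to the LLM and returns the parsed fields. A real pipeline would need a relevance filter tuned to its own documents.

```python
from typing import Callable, Dict, List

# Hypothetical split of the extraction into small, focused tasks.
TASKS: Dict[str, List[str]] = {
    "header": ["invoice_id", "date"],
    "items": ["items"],
}

# Hypothetical keywords that mark a page as relevant.
KEYWORDS = ("invoice", "total", "order")


def relevant_pages(pages: List[str]) -> List[str]:
    """Keep only pages that mention at least one keyword."""
    return [p for p in pages if any(k in p.lower() for k in KEYWORDS)]


def build_prompt(fields: List[str], text: str) -> str:
    """Build a small prompt that asks for a handful of fields only."""
    return f"Extract only these fields: {', '.join(fields)}.\n\n{text}"


def extract_document(pages: List[str], run_task: Callable[[str], dict]) -> dict:
    """Filter the pages, run one small prompt per task, and merge the results."""
    text = "\n".join(relevant_pages(pages))
    result: dict = {}
    for fields in TASKS.values():
        partial = run_task(build_prompt(fields, text))
        # Keep only the fields this task was responsible for.
        result.update({f: partial[f] for f in fields if f in partial})
    return result
```

Each task could also get its own schema, so that structured outputs enforce the shape of every partial result before the merge.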

Competition or not?

Claude is a well-known player in the LLM field. Competition is always great, but in this particular segment, I'm afraid Claude is lagging. It provides a JSON mode that produces JSON but doesn't guarantee the structure. This is exactly what ChatGPT had before structured outputs. Claude is an outstanding model; we run it in production together with ChatGPT, and I hope Anthropic steps up its game.

The future direction

A glimpse into the toolbox of a modern engineer will uncover impressive tools such as compilers, languages, frameworks, IDEs, etc. On the other hand, LLMs seem quite poor in what they can output - plain strings. Don't get me wrong, strings are great, especially when they are backed by the sheer power of AI.

For me, structured outputs represent a breakthrough – a stepping stone toward greater advancements in the world of software development. By providing a standardised framework for working with LLMs, we're finally able to build on top of them with confidence. Building software is always a challenge, but at least now I feel I can express myself better. I'm excited to see what other tools will emerge in the future.